Cluster managers allocate resources across all applications. The cluster manager types described in this article are the ones Spark uses to run on a cluster.

#INSTALL APACHE SPARK CLUSTER DRIVER#

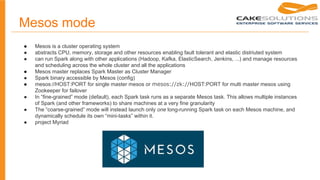

Spark applications run on the cluster as independent sets of processes, coordinated by the SparkContext object in the main program (called the driver program).

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications.

YARN became a sub-project of Hadoop in 2012. It splits the functions of resource management and job scheduling into separate daemons, with the goal of having a global ResourceManager and a per-application ApplicationMaster. An application is either a single job or a DAG of jobs.

Apache Mesos is the reverse of virtualization. In virtualization, one physical resource is divided into many virtual resources; in Mesos, many physical resources are combined into a single virtual resource.

#INSTALL APACHE SPARK CLUSTER MAC#

Apache Mesos is used by companies such as Twitter and Airbnb and runs on Mac and Linux. It is a resource management platform for Hadoop and big data clusters. Mesos can pool the existing resources of the machines and nodes in a cluster. Because it abstracts away individual nodes, it reduces the overhead of allocating a specific machine to each workload, which helps with deployment and management in large-scale cluster environments. Mesos handles the workload in a distributed environment through dynamic resource sharing and isolation.

In a clustered environment, standalone mode is often a simple way to run any Spark application.

#INSTALL APACHE SPARK CLUSTER WINDOWS#

Standalone cluster manager in the Apache Spark system: this mode ships with Spark and simply incorporates a cluster manager. It runs on Linux, Mac, and Windows, and it makes setting up a Spark cluster easy. Let us look at each type in turn.

How does an Apache Spark cluster work?

Several types of cluster manager support the Apache Spark system.
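In practice, a Spark application usually selects its cluster manager through the master URL it is given. The sketch below maps the cluster managers discussed in this article to the master URL formats that Spark accepts. The type names, the `MasterUrls` object, and the host names are illustrative assumptions, not part of Spark itself; only the URL schemes (`spark://`, `mesos://`, `yarn`, `k8s://`) and the default ports come from Spark.

```scala
// Illustrative model of the cluster managers discussed in this article.
// These types are hypothetical helpers, not Spark classes.
sealed trait ClusterManager
final case class Standalone(host: String, port: Int = 7077) extends ClusterManager
final case class Mesos(host: String, port: Int = 5050) extends ClusterManager
case object Yarn extends ClusterManager
final case class Kubernetes(apiServer: String) extends ClusterManager

object MasterUrls {
  // Build the master URL string that would be passed to
  // SparkSession.builder().master(...) or to spark-submit --master.
  def masterUrl(cm: ClusterManager): String = cm match {
    case Standalone(host, port) => s"spark://$host:$port"
    case Mesos(host, port)      => s"mesos://$host:$port"
    case Yarn                   => "yarn" // cluster location comes from the Hadoop configuration
    case Kubernetes(apiServer)  => s"k8s://$apiServer"
  }
}
```

For example, `MasterUrls.masterUrl(Standalone("master-host"))` yields `spark://master-host:7077`, where `master-host` is a placeholder for the machine running the standalone master.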

This is the implementation API for the K-means data clustering algorithm.

8. Now delete the following from the project workspace.

9. Add the Spark dependencies to the Maven pom.xml file.

Create the Spark Hello World application in IntelliJ

1. Now create the Spark Hello World program. This example does not print the text "Hello World"; instead, it creates a SparkSession and prints the Spark app name, master, and deployment mode to the console. The program includes a line such as `val sparkSession2 = SparkSession.builder()`.

4. Sometimes the dependencies in pom.xml are not loaded automatically; in that case, re-import the dependencies or restart IntelliJ.

5. Finally, run the Spark application SparkSessionTest. Its output should appear on the console. If you still get errors while running the Spark application, restart the IntelliJ IDE and run the application again. You should then see the message in the console.
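The Hello World program described in the steps above could look roughly like the following. This is a minimal sketch, not the article's original listing: the `local[1]` master, the app name string, and the `describe` helper are assumptions made for illustration, and the code needs the Spark SQL dependency from pom.xml on the classpath.

```scala
import org.apache.spark.sql.SparkSession

object SparkSessionTest {

  // Hypothetical helper: collect the three values the article says are printed.
  def describe(spark: SparkSession): Seq[String] = Seq(
    s"App name: ${spark.sparkContext.appName}",
    s"Master: ${spark.sparkContext.master}",
    s"Deploy mode: ${spark.sparkContext.deployMode}"
  )

  def main(args: Array[String]): Unit = {
    // Assumption: run locally with a single worker thread.
    val spark = SparkSession.builder()
      .master("local[1]")
      .appName("SparkSessionTest")
      .getOrCreate()

    try {
      describe(spark).foreach(println)
    } finally {
      spark.stop() // release local Spark resources
    }
  }
}
```

Running `SparkSessionTest` from IntelliJ prints the three lines to the console, mixed in with Spark's own log output.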

#INSTALL APACHE SPARK CLUSTER DOWNLOAD#

2. After the plugin is installed, restart the IntelliJ IDE. IntelliJ will then prompt you to set up the Scala SDK.

4. Select Setup Scala SDK, which opens a new window.

5. In the next window, select the Download option.

6. Choose Scala version 2.12.12 (the latest at the time of writing this article).

Next, we need to make some changes to the pom.xml file. You can either follow the instructions below or download the pom.xml file from the GitHub project and use it to replace your own pom.xml.
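To confirm that the project actually picked up the Scala SDK chosen in the steps above, a small check such as the following may help. It is a sketch under the assumption that the project targets Scala 2.12.x; the `ScalaVersionCheck` object and its helper are hypothetical names, while `scala.util.Properties.versionNumberString` is part of the Scala standard library.

```scala
object ScalaVersionCheck {

  // Hypothetical helper: true when the Scala library on the classpath
  // belongs to the expected binary version, e.g. "2.12".
  def matchesBinaryVersion(expected: String): Boolean =
    scala.util.Properties.versionNumberString.startsWith(expected + ".")

  def main(args: Array[String]): Unit = {
    println(s"Scala library version: ${scala.util.Properties.versionNumberString}")
    println(s"Matches 2.12.x: ${matchesBinaryVersion("2.12")}")
  }
}
```

If the check reports a mismatch, the Scala SDK configured in IntelliJ and the Scala version used by the Spark dependencies in pom.xml are worth comparing, since Spark artifacts are built for a specific Scala binary version.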
